Machine Learning Revolutionizes Prosthetic Hands with Smarter Grip

AIP Publishing LLC

January 20, 2026

AI-Generated Summary

Researchers developed a system for prosthetic hands that uses machine learning, a camera, and an EMG sensor. This technology aims to enable prosthetic hands to predict and apply appropriate grip strength in real-time for various objects. The goal is to create more natural and intuitive interaction, allowing users to focus on tasks rather than complex control.

Using machine learning, a camera, and a sensor, researchers improve the way prosthetic hands predict the required grip strength.

From the Journal: Nanotechnology and Precision Engineering

WASHINGTON, Jan. 20, 2026 — Holding an egg requires a gentle touch. Squeeze too hard, and you’ll make a mess. Opening a water bottle, on the other hand, needs a little more grip strength.

According to the U.S. Centers for Disease Control and Prevention, there are approximately 50,000 new amputations in the United States each year. The loss of a hand can be particularly debilitating, affecting patients’ ability to perform standard daily tasks. One of the primary challenges with prosthetic hands is tuning the grip appropriately for the object being handled.

In Nanotechnology and Precision Engineering, by AIP Publishing, researchers from Guilin University of Electronic Technology, in China, developed an object identification system for prosthetic hands to guide appropriate grip strength decisions in real time.

“We want to free the user from thinking about how to control [an object] and allow them to focus on what they want to do, achieving a truly natural and intuitive interaction,” said author Hua Li.

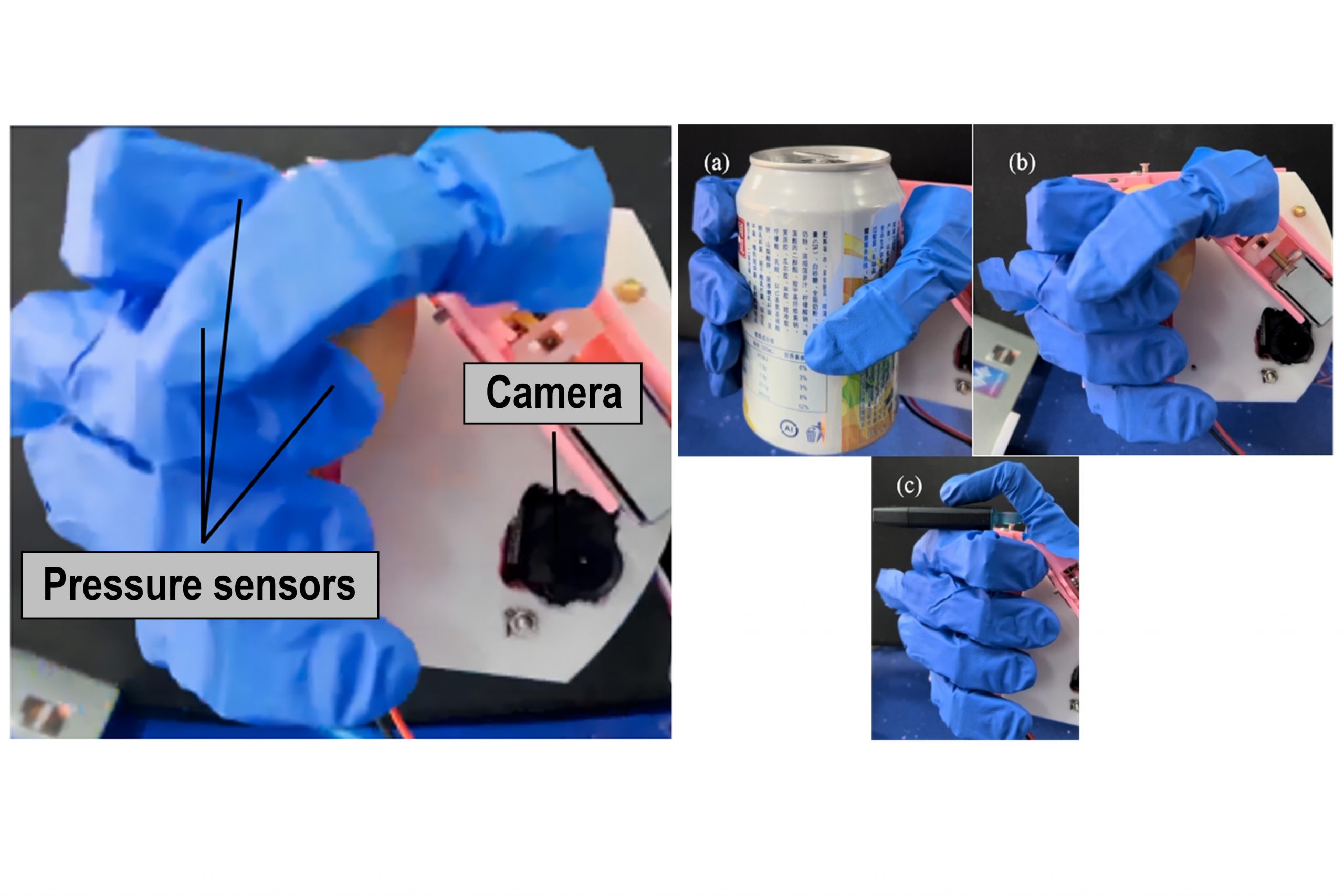

Pens, cups and bottles, balls, metal sheet objects like keys, and fragile objects like eggs make up over 90% of the types of items disabled patients use daily. The researchers measured the grip strength needed to interact with these common items and fed these measurements into a machine learning-based object identification system that uses a small camera placed near the palm of the prosthetic hand.

Their system also uses an electromyography (EMG) sensor on the user’s forearm to determine what the user intends to do with the object at hand.

“An EMG signal can clearly convey the intent to grasp, but it struggles to answer the critical question, how much force is needed? This often requires complex training or user calibration,” said Li. “Our approach was to offload that ‘how much’ question to the vision system.”
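The division of labor Li describes, with EMG answering "whether to grasp" and vision answering "how much force," can be illustrated with a minimal Python sketch. This is not the authors' implementation: the object labels, the force values, the EMG threshold, and the function names are all hypothetical placeholders chosen for illustration, and the sketch assumes the camera's classifier has already produced an object label.

```python
# Illustrative sketch only: every name and number here is a hypothetical
# placeholder, not a value or API from the published system.

# Assumed target grip forces in newtons for the object categories named in
# the article. These are NOT the researchers' measurements.
GRIP_FORCE_N = {
    "pen": 2.0,
    "cup_or_bottle": 6.0,
    "ball": 4.0,
    "key": 3.0,
    "egg": 1.0,
}

# Assumed normalized EMG activation (0.0 to 1.0) above which we treat the
# signal as an intent to grasp.
EMG_INTENT_THRESHOLD = 0.5


def grasp_intended(emg_level: float, threshold: float = EMG_INTENT_THRESHOLD) -> bool:
    """EMG answers only the yes/no question: does the user want to grasp?"""
    return emg_level >= threshold


def target_grip_force(object_label: str, emg_level: float) -> float:
    """Combine EMG intent with the vision label to pick a target force.

    Returns 0.0 when no grasp is intended. For a label the table does not
    know, falls back to the gentlest force in the table (a design assumption
    made here so that an unrecognized object is never crushed).
    """
    if not grasp_intended(emg_level):
        return 0.0
    gentlest = min(GRIP_FORCE_N.values())
    return GRIP_FORCE_N.get(object_label, gentlest)


if __name__ == "__main__":
    # The label would come from the palm camera's classifier; hard-coded here.
    print(target_grip_force("egg", emg_level=0.8))
    print(target_grip_force("cup_or_bottle", emg_level=0.8))
    print(target_grip_force("egg", emg_level=0.1))
```

In this sketch the EMG reading never scales the force; it only gates the grasp, which mirrors the idea of offloading the "how much" question to the vision system. A real controller would also need closed-loop force sensing, which is outside the scope of this illustration.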

The group plans to integrate haptic feedback into their system, providing an intuitive physical sensation to the user, which can establish a two-way communication bridge between the user and the hand using additional EMG signals.

“What we are most looking forward to, and currently focused on, is enabling users with prosthetic hands to seamlessly and reliably perform the fine motor tasks of daily living,” said Li. “We hope to see users be able to effortlessly tie their shoelaces or button a shirt, confidently pick up an egg or a glass of water without consciously calculating the force, and naturally peel a piece of fruit or pass a plate to a family member.”

###

Article Title

Design of intelligent artificial limb hand with force control based on machine vision

Authors

Yao Li, Xiaoxia Du, and Hua Li

Author Affiliations

Guilin University of Electronic Technology
